While I was putting together my post on how I manage my picture workflow and archiving, it got me thinking about an even larger problem: the archiving and workflow of the production servers here at Empressr and Fusebox. In this post I'm going to talk about that archiving workflow.

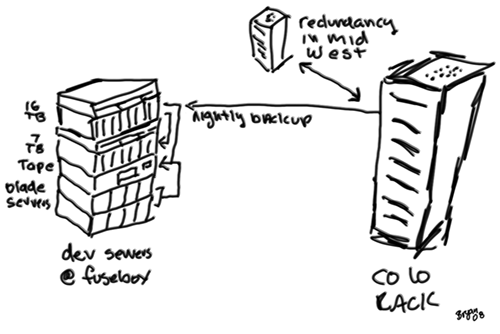

At Fusebox we have a climate-controlled server room with about 3 racks of assorted machines, all used for development and staging of applications and Web sites. We also have a couple of co-lo racks in a hosting facility here in the greater NYC area and a redundant facility in a top secret location somewhere in the middle of the US.

First, the production environment. We do incremental backups from our rack to our office through a VPN tunnel every evening; these backups go to a restricted-access partition on our development server. That partition is synced to another partition on a different RAID, which is then backed up to tape. Finally, any service that needs redundancy (or disaster recovery) is synced to the respective server at our top secret location, yes, somewhere in the Midwest.
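For readers who want to see what "incremental" means in practice, here is a minimal sketch in Python. It is not the tooling we actually run; the function name `incremental_backup` and the rule it uses (copy a file only if it is missing, differs in size, or is newer than the backed-up copy) are assumptions made for illustration.

```python
import shutil
from pathlib import Path


def incremental_backup(source: Path, dest: Path) -> list[Path]:
    """Copy new or changed files from source to dest.

    Hypothetical helper for illustration only. Returns the relative
    paths of the files that were copied on this run.
    """
    copied = []
    for src_file in sorted(source.rglob("*")):
        if not src_file.is_file():
            continue  # directories are created on demand below
        rel = src_file.relative_to(source)
        dst_file = dest / rel
        if dst_file.exists():
            s, d = src_file.stat(), dst_file.stat()
            # Unchanged: same size and the source is not newer than the copy.
            if s.st_size == d.st_size and s.st_mtime <= d.st_mtime:
                continue
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        # copy2 preserves the modification time, so the next run can skip this file.
        shutil.copy2(src_file, dst_file)
        copied.append(rel)
    return copied
```

Running it twice in a row against the same folders should copy everything the first time and nothing the second, which is the whole point of an incremental run: only the changes travel over the VPN tunnel.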

So then on to our development environment. At a high level, we use a combination of Mac OS X, Windows, and flavors of Unix in this environment, so backup gets tricky. All the front-end graphics, images, and video get stored on a Mac Xserve that is hooked to a 16TB RAID 5 storage unit. That gets synced on a nightly basis to an Xserve with a 7TB RAID. The Unix machines also back up to the 16TB RAID. Then this all gets written to tape as a nightly incremental backup. Some of the Windows machines get backed up directly, and some go through a process similar to the Unix boxes.

I find this to be a tedious and expensive process and really need to change the way we archive. Tape seems too archaic (and unreliable), and backing up to the cloud is too expensive. My only thought right now is to have redundant RAIDs making backups of the data.

Any thoughts are greatly appreciated. Oh, and here's another drawing. This one is a bit sketchy, as I only spent 5 minutes doing it.